Hyperparameter theft? Let me explain.

Hyperparameters are configuration choices that sit outside your machine learning (ML) algorithm and therefore can’t be learned by it during training. But they’re integral to that algorithm’s performance.

As a result, humans spend plenty of time, effort and resources perfecting their hyperparameters. And due to their influence over ML outcomes, these hyperparameters become valuable trade secrets.

Unfortunately, nefarious people can use machine learning to help “steal” those hyperparameters. This “hyperparameter theft” raises new questions about security in the field of ML and AI.

It’s important to keep your hyperparameters safe and secure. So, let’s explore the role they play and how they can fall into enemy hands.

What’s a hyperparameter?

Put simply, a hyperparameter is a setting that controls how your ML algorithm learns its parameters. Its value is set before the learning process begins, in contrast to parameters (such as a model’s weights), which are learned from the data during training.

You can think of a hyperparameter like a knob on a machine. You dial it up or down to “tune” how your ML algorithm should work, then you start your training. Hyperparameters include things like batch size, momentum, learning rate and network size.

How you define your hyperparameters can affect the time needed to train and test a model on the same dataset. For example, scaling the learning rate or the network size can have a significant effect on performance.
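To make the knob idea concrete, here is a minimal Python sketch (a toy example, not taken from any product) that minimizes the one-parameter loss f(w) = (w - 3)**2 with plain gradient descent. The weight `w` is the parameter the algorithm learns; `learning_rate` and `steps` are hyperparameters fixed before training starts. The function name and the loss are illustrative assumptions.

```python
def gradient_descent(learning_rate, steps=50, w0=0.0):
    """Minimize the toy loss f(w) = (w - 3)**2 with plain gradient descent.

    w is the parameter learned from the loss; learning_rate and steps are
    hyperparameters chosen before training starts.
    """
    w = w0
    for _ in range(steps):
        grad = 2 * (w - 3)          # derivative of (w - 3)**2
        w -= learning_rate * grad   # one "knob" controls the size of every step
    return w


for lr in (0.01, 0.1, 1.1):
    print(f"learning_rate={lr}: w after 50 steps = {gradient_descent(lr):.4f}")
```

With the same loss and the same number of steps, a learning rate of 0.1 lands essentially on the optimum w = 3, 0.01 is still well short of it, and 1.1 overshoots by more on every step and diverges.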

It’s a bit like baking a cake. Your parameters are the ingredients, while your hyperparameters are the settings on your oven. Baking the same ingredients at 600 degrees for 2 minutes results in a very different outcome than baking them at 300 degrees for 35 minutes.

Why are hyperparameters a big deal?

Hyperparameters shape the performance and outcomes of your trained model. Different hyperparameters result in different performance, which is why they often become closely guarded trade secrets. Remember the Pepsi taste test? Pepsi and Coke might both be colas, but they’re not the same product.

Similarly, there are other sentiment analysis companies out there. But their machine learning products aren’t the same as ours. That’s because we all have our own proprietary “secret sauce” hyperparameters that shape our products – take our Lexalytics (an InMoment company) AI Assembler, for example.

The right “tuning” of hyperparameters is essential when purchasing an ML product from a company or building your own.

It’s not just about us – it’s about you

Pepsi and Coke keep their recipes safe because their brands are built on them – and they’ve spent a lifetime perfecting and developing them. AI companies keep their hyperparameters confidential for the same reason. Hyperparameters have real commercial value.

The thing about Lexalytics technology is that it doesn’t end with us. Our software allows clients to develop their own hyperparameters to suit their own domain. Say you’re a hotel chain and you define your own hyperparameters for your industry. The resulting models can give you an edge over your competitors, so you’ll want to keep them protected.

Which leads us to hyperparameter theft.

Can you even steal a hyperparameter?

Okay, so it’s feasible that someone could steal a recipe (or your oven presets). But how do you steal a hyperparameter?

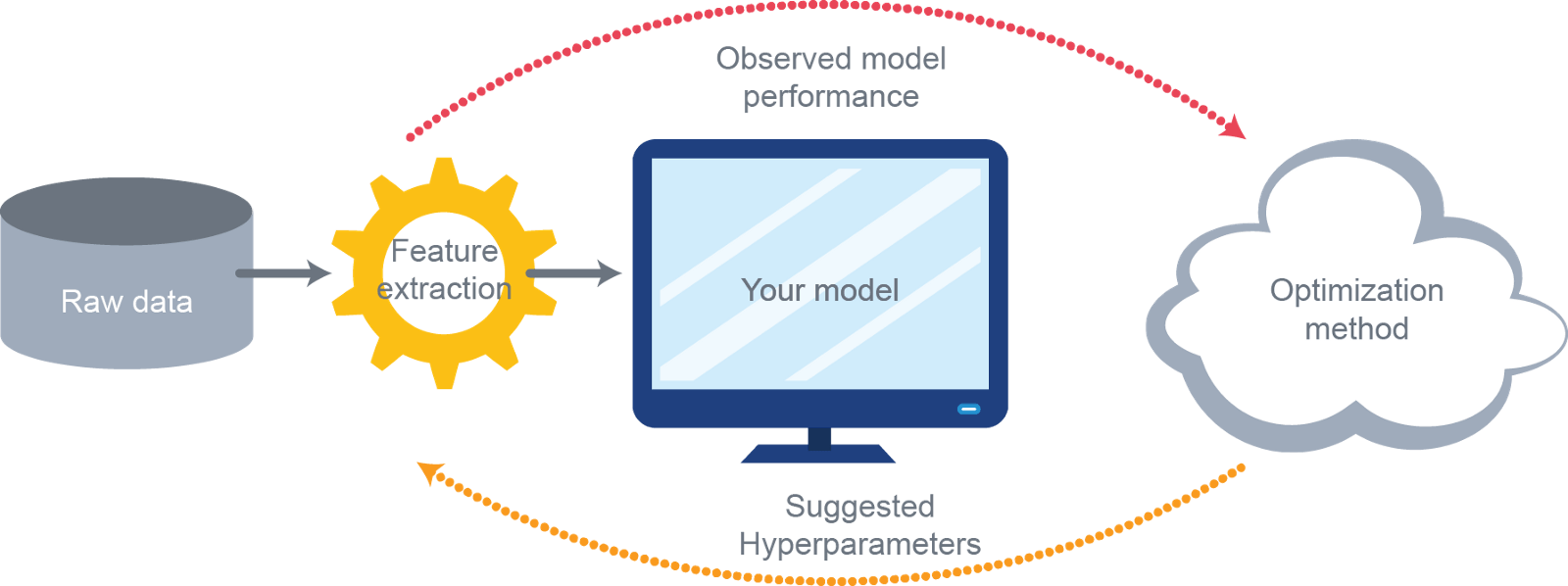

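Well, one way is with AI. Say your attacker (a computer) knows your dataset and your ML algorithm. They can then build their own model to “learn” your model parameters by sampling some of the training dataset. Next up, they can work backward from those parameters to your hyperparameters: a trained model’s parameters usually sit at (or very near) a minimum of its training objective, where the objective’s gradient is zero, so the attacker can solve that zero-gradient condition for the hyperparameter values. Now they have everything they need to recreate your work or, arguably worse, embark upon a retraining attack.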

Hyperparameters can be estimated and stolen when the model parameters are known, and even when they’re not. Some hyperparameters, such as those for Ridge Regression and Kernel Ridge Regression, can be computed more accurately than others, but the hyperparameters of many widely used algorithms can be determined algorithmically.
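The zero-gradient idea can be sketched for Ridge Regression. The Python below is a minimal illustration under stated assumptions, not a reproduction of any published attack code: it assumes the victim minimized ||y - Xw||² + λ||w||² with no intercept term, and that the attacker knows the training data `X`, `y` and the learned weights `w`. Setting the gradient to zero gives Xᵀ(y - Xw) = λw, one equation per weight, and the attacker solves for the single unknown λ by least squares. All function names and the synthetic data are hypothetical.

```python
import random


def solve(A, b):
    """Solve the square system A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):  # back-substitution on the upper-triangular system
        tail = sum(M[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (M[i][n] - tail) / M[i][i]
    return w


def ridge_fit(X, y, lam):
    """Victim's training step (assumed objective): minimize ||y - Xw||^2 + lam * ||w||^2.

    The minimizer solves (X^T X + lam * I) w = X^T y.
    """
    d = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(d)]
    return solve(A, b)


def steal_ridge_lambda(X, y, w):
    """Attacker's step: estimate lam from known data (X, y) and learned weights w.

    Zero gradient at the optimum gives X^T (y - X w) = lam * w: d equations in
    one unknown. The least-squares solution is lam = (w . g) / (w . w),
    where g = X^T (y - X w).
    """
    residual = [yi - sum(wj * xj for wj, xj in zip(w, row)) for row, yi in zip(X, y)]
    g = [sum(row[i] * r for row, r in zip(X, residual)) for i in range(len(w))]
    return sum(wi * gi for wi, gi in zip(w, g)) / sum(wi * wi for wi in w)


# Synthetic example: the attacker knows X, y and w, but not secret_lam.
random.seed(0)
X = [[random.gauss(0, 1) for _ in range(3)] for _ in range(50)]
true_w = [2.0, -1.0, 0.5]
y = [sum(t * x for t, x in zip(true_w, row)) + random.gauss(0, 0.1) for row in X]
secret_lam = 4.2
w = ridge_fit(X, y, secret_lam)
print(f"stolen lambda = {steal_ridge_lambda(X, y, w):.6f}")
```

Because the closed-form ridge solution satisfies the zero-gradient equation exactly, the estimate matches the secret λ up to floating-point error here. Models trained with iterative solvers stop only near the optimum, so in practice an attack like this recovers an approximation.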

How can we keep our hyperparameters safe?

There are countermeasures available for defending against such attacks, such as rounding model parameters, using ensemble methods, employing differentially private ML functions (to create “noise”), or using prediction API minimization.
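Rounding model parameters works by breaking the exact zero-gradient relationship an attacker of this kind relies on. A minimal Python sketch, assuming a hypothetical one-feature ridge model with no intercept and synthetic data, shows the effect: the attacker recovers λ exactly from the full-precision weight, but only approximately once the released weight is rounded. Function names and data are illustrative assumptions.

```python
import random


def fit_ridge_1d(xs, ys, lam):
    """Owner's side: one-feature ridge regression with no intercept.

    Minimizes sum((y - w*x)**2) + lam * w**2, whose closed form is
    w = sum(x*y) / (sum(x*x) + lam).
    """
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def steal_lambda_1d(xs, ys, w):
    """Attacker's side: zero-gradient condition sum(x*(y - w*x)) = lam * w, solved for lam."""
    return sum(x * (y - w * x) for x, y in zip(xs, ys)) / w


# Synthetic training data (hypothetical); the attacker is assumed to know it.
random.seed(1)
xs = [random.gauss(0, 1) for _ in range(100)]
ys = [1.5 * x + random.gauss(0, 0.5) for x in xs]
secret_lam = 10.0

w = fit_ridge_1d(xs, ys, secret_lam)
for decimals in (None, 3, 1):
    # Countermeasure: publish only a rounded copy of the learned weight.
    released = w if decimals is None else round(w, decimals)
    estimate = steal_lambda_1d(xs, ys, released)
    print(f"decimals={decimals}: stolen lambda = {estimate:.4f}")
```

Coarser rounding generally degrades the attacker’s estimate more, but it also perturbs the model the owner publishes, so how much protection rounding buys depends on the algorithm and on how much precision can be given up.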

However, there’s a clear need to advance security around hyperparameters. This will become more pressing as use cases move closer to government and security – and as “attacking” ML algorithms become more sophisticated.