Background

Over the past couple of weeks, I’ve been working on theme extraction to analyze the Presidential (and Vice Presidential) debates. Theme extraction is a straightforward NLP process that uses part-of-speech patterns and a scoring technique called “lexical chaining”. Although theme extraction cuts through the fluff to show the most lexically significant sentence fragments, it still requires subjective assessment to determine which candidate stayed closer to the subject of the moderator’s question.

To get to a quantifiable measure of similarity, I’ve used another component from our linguistic toolbox, the Concept Matrix™. The Concept Matrix is a machine learning model derived from Wikipedia content that contains millions of connections between related words. In our Salience text analytics engine, it allows us to make conceptual matches between document content and topics of interest to clients, without the need for lengthy queries. In effect, it gives us a measure of similarity between two pieces of text.

The themes extracted from the candidate responses are only sentence fragments, so on their own they lack the context needed for a comparison of similarity. However, they do serve as a beacon to the most significant sentences in each candidate’s response. If we string together the complete sentences in which the highest scoring themes appear, we get a representative summary of the key statements in the candidate’s response, which can then be compared to the original question.
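The summarize-then-compare step described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the Concept Matrix and Salience are not used here, and a plain bag-of-words cosine similarity stands in for the conceptual similarity score. The function names, the theme scores, and the sample text are all hypothetical and invented for the example; they are not taken from the debate transcripts.

```python
import math
import re
from collections import Counter


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(text):
    """Lowercase the text and keep runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def summarize_by_themes(response, scored_themes, top_n=3):
    """Join, in original order, the sentences containing any of the top_n themes.

    scored_themes is a list of (theme_text, score) pairs (hypothetical input).
    """
    ranked = sorted(scored_themes, key=lambda pair: pair[1], reverse=True)
    top = [theme.lower() for theme, _ in ranked[:top_n]]
    kept = [s for s in split_sentences(response)
            if any(theme in s.lower() for theme in top)]
    return " ".join(kept)


def cosine_similarity(text_a, text_b):
    """Bag-of-words cosine similarity: a stand-in, NOT the Concept Matrix."""
    a, b = Counter(tokenize(text_a)), Counter(tokenize(text_b))
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Invented toy data, for illustration only.
question = "How will you create jobs and grow the economy?"
response = ("Thank you for having us. We need new jobs in clean energy. "
            "I met a farmer last week. A growing economy helps every family.")
themes = [("new jobs", 4.2), ("growing economy", 3.7), ("farmer", 0.9)]

summary = summarize_by_themes(response, themes, top_n=2)
print(summary)
print(cosine_similarity(summary, question))
```

With `top_n=2`, the greeting and the farmer anecdote are dropped, and only the two sentences containing the highest scoring themes are compared against the question. A word-overlap measure like this one cannot match related concepts that use different words, which is the gap a model like the Concept Matrix is meant to fill.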

Debate #1

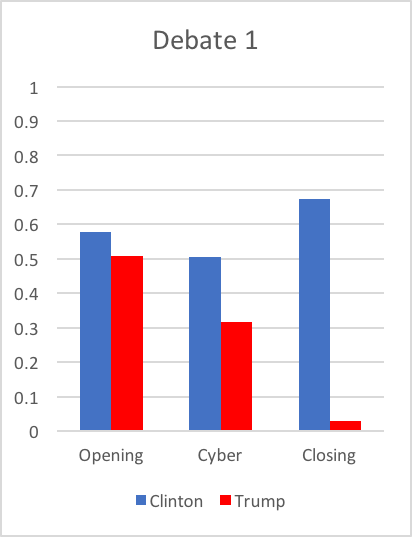

Themes were extracted from three different questions asked of Hillary Clinton and Donald Trump in the first Presidential Debate: the opening question related to jobs and the economy (“Achieving Prosperity”), a question on cybersecurity (“Securing America”), and the closing question in which Lester Holt asked the candidates if they would respect the outcome of the election.

This was the first time we ever tried this, and the above chart provides a good example of what this analysis “looks” like in practical terms. The y-axis in the charts above and below measures how relevant each candidate’s answer is to the content of the moderator’s question.

The first question in the first debate was very broad, and allowed both candidates to answer with what were essentially parts of the “stump” speeches they give on the campaign trail. So you can see that both of those answers were relevant to the moderator’s question, with Clinton’s answer being slightly more germane to the question than Trump’s.

The second question analyzed was the cybersecurity question, and it provides a good example of why I cut the answers down to just the most relevant sentences. In Trump’s answer, he repeated the word “cyber” a number of times. This artificially inflated his score, showing his answer as more relevant to the question than it actually was. The same thing happens with Clinton’s answers, too.
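To see how repetition alone can push a score up, here is a minimal sketch that assumes a simple term-frequency cosine similarity. That scoring is an assumption made purely for illustration and is not how the Concept Matrix works; the question and the two answers are invented, not quoted from the debate.

```python
import math
import re
from collections import Counter


def term_counts(text):
    """Count lowercase word occurrences in the text."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def tf_cosine(text_a, text_b):
    """Cosine similarity over raw term counts (illustrative stand-in only)."""
    a, b = term_counts(text_a), term_counts(text_b)
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Invented toy data, for illustration only.
question = "How do we defend against cyber attacks?"
said_once = "Cyber is a big problem."
repeated = "Cyber, cyber, cyber, cyber is a big problem."

print(tf_cosine(said_once, question))
print(tf_cosine(repeated, question))
```

The second answer adds no new information, yet it scores higher than the first simply because the shared word appears more often. Trimming each response to its key sentences limits how much this kind of repetition can count.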

The final question is a good example of what happens when one person answers the question asked and another “filibusters,” or says a lot without really addressing the question. In this response, about whether or not they would accept the results of the election, Clinton answered the question pretty explicitly. Trump, on the other hand, didn’t really answer it at all.

Debate #2

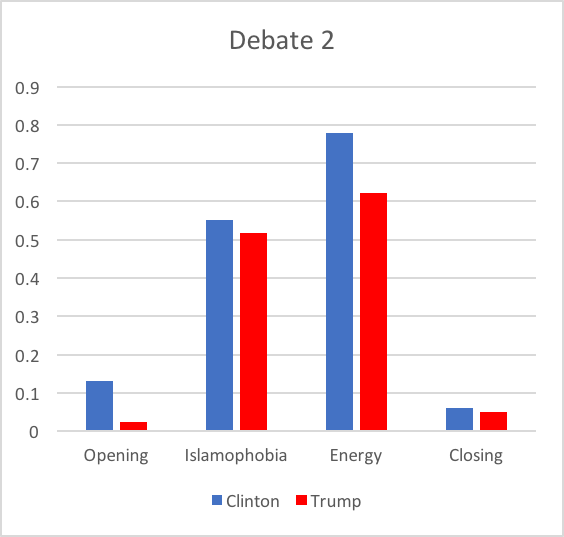

Themes were extracted from four different questions in the second Presidential Debate: the opening question about the candidates modelling appropriate behavior, a question from an audience member on the rise of Islamophobia in America, a question from an audience member on each candidate’s energy policy, and a closing question asking each candidate to name a positive quality of their opponent.

With the analysis of the first debate to guide us, we are able to evaluate the answers given by the candidates in the second debate. It’s worth remembering we’re not evaluating the philosophical substance of the debate, but rather how on-topic their answers are.

On the question about Islamophobia, both candidates answered with similar levels of relevance. The key statements we analyzed were focused on the topic of the question, even though Clinton’s and Trump’s approaches to the issue are markedly different.

The same is true for the question about energy policy (asked by Twitter’s favorite questioner, Ken Bone), which touched on two key elements of the issue: environmental and economic impact. Here, though, Clinton’s answer was significantly more on-topic than Trump’s, mostly because her answer was “solution” oriented, while her opponent only really outlined the problems.

The first and last questions are interesting ones, because both were very subjective. Each dealt with positivity (being a positive role model, and saying something nice about the other candidate), which is a concept that’s difficult to score linguistically.

Clinton’s answer is scored slightly higher, but that’s because she spoke optimistically about the election as a whole, through the framework of praising Trump’s children. Trump’s answer, calling Clinton a “fighter,” could (in print) be interpreted as a complaint about her character as much as it could be taken as a compliment.

Again, this project is still getting on its feet. Still, these results suggest that this is a useful tool for evaluating whether policy statements by politicians and elected officials are actually about the topics they’re supposed to be.

We’ll be running another analysis for the next debate, so watch this space for those results.